Aurora

When RL Meets Adaptive Speculative Training: A Unified Training-Serving System

Abstract

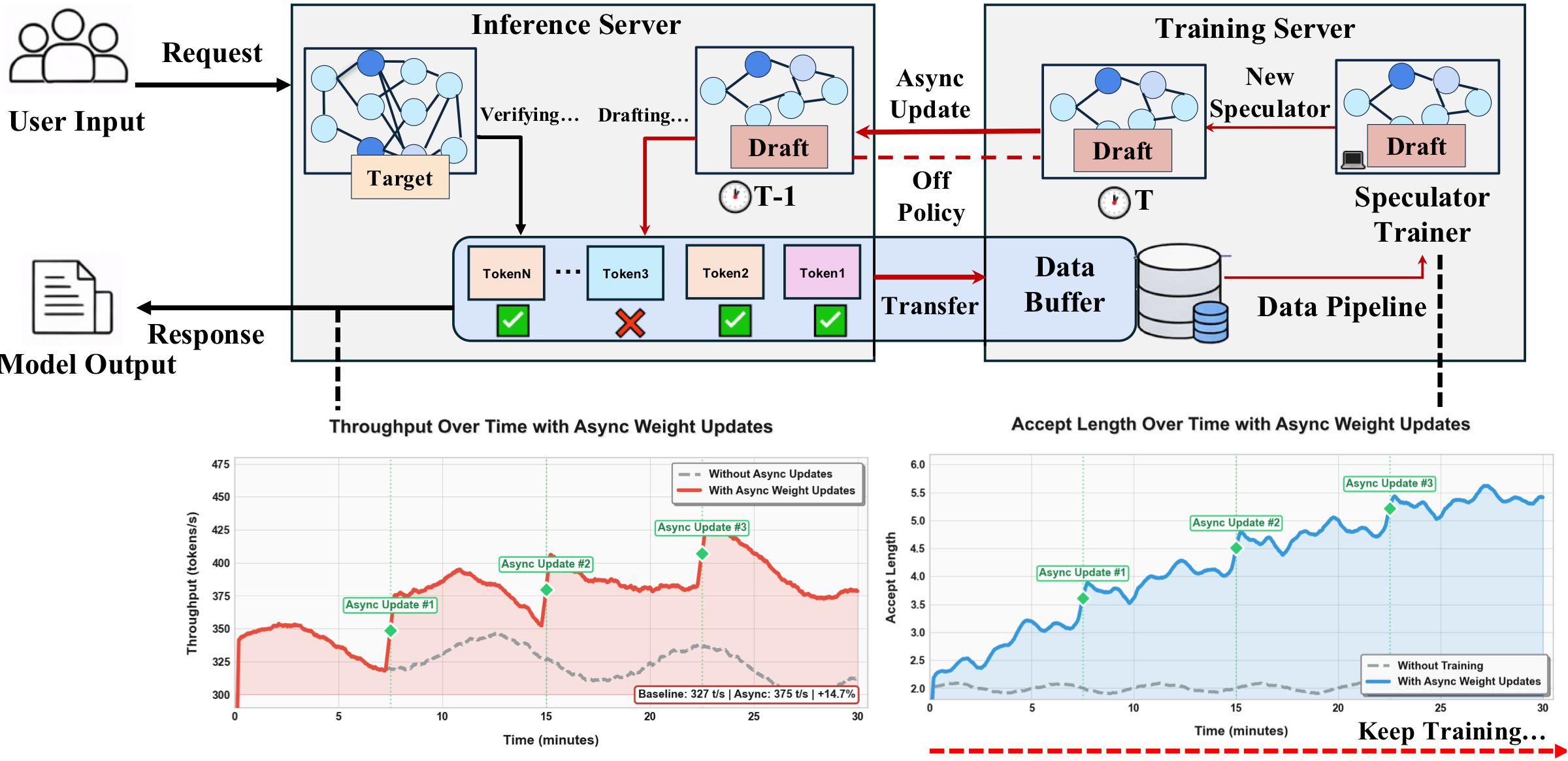

Speculative decoding can significantly accelerate LLM serving, but its real-world benefits often erode due to training-serving mismatch and non-stationary traffic. Whereas previous systems decouple speculator training from inference, we present Aurora, a unified training-serving system that closes this loop by continuously learning a speculator model directly from live inference traces. Our design integrates an SGLang-based inference server with an asynchronous training server connected via efficient GPU-to-GPU RPC, enabling hot-swapped speculator updates without service interruption.

Crucially, our system supports day-0 deployment: a speculator can be served immediately and quickly adapted on live traffic, improving overall system throughput. This paradigm shift enables us to frame the training-serving loop as an asynchronous reinforcement learning process and allows us to leverage rejected tokens from the speculator to improve sampling efficiency. Our experiments show that this unified system achieves a 1.33× speedup in the mixed-data scenario when starting from an untrained (from-scratch) speculator, and a 1.48× speedup when starting from an existing static speculator. We also find that the system adapts more effectively to distribution shifts in user traffic, delivering a 1.25× speedup over a well-trained but static speculator.

Motivation

Most speculative decoding deployments follow a two-stage pipeline: offline training and separate serving. This separation introduces systematic challenges that limit real-world performance:

1. Training-Serving Mismatch Gap

Offline drafter training optimizes acceptance in a controlled setting, but production speedups depend on deployment stack details—kernel implementations, numeric precision (FP8/FP4), batching, and scheduling. A drafter that appears strong offline may realize materially lower acceptance once integrated into production.

2. Moving-Target Gap from Verifier Drift

Target models are updated frequently for quality, safety, cost, or hardware migration. However, the drafter is typically refreshed on a slower cadence due to retraining cost and pipeline complexity. This creates significant staleness and degrades speculative performance.

3. High Infrastructure Cost

Off-policy distillation pipelines often require collecting large volumes of target model activations or signals. At production scale, the storage footprint can reach petabyte-level magnitude, with high cost in memory, bandwidth, and operational complexity.

System Architecture

Aurora consists of two primary, decoupled components:

Inference Server

Runs an SGLang speculative decoding engine with a target model and a draft model. For each request, the draft model proposes a sequence of tokens, which are then verified in parallel by the target model. The outcomes—both accepted and rejected tokens—are streamed to a distributed data buffer.

Training Server

Operates asynchronously, fetching batches of training data from the data buffer and performing gradient updates on a copy of the draft model. Once a new, improved speculator is ready, its weights are asynchronously pushed back to the Inference Server via hot-swap, without downtime or service interruption.

This decoupled, asynchronous architecture allows the training process to run continuously on dedicated resources while the inference process remains highly responsive and optimized for low-latency serving.
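The interaction between the two components can be illustrated with a minimal single-process sketch. It is not Aurora's implementation: a `queue.Queue` stands in for the distributed data buffer, a thread stands in for the training server, and the names `InferenceServer`, `hot_swap`, `serve`, `train`, and `run_demo` are hypothetical. The "gradient step" is a placeholder arithmetic update, and the trace contents are made-up token IDs.

```python
import queue
import threading


class InferenceServer:
    """Toy stand-in for the serving side; holds the currently served draft weights."""

    def __init__(self, draft_weights):
        self._lock = threading.Lock()
        self._weights = dict(draft_weights)
        self.version = 0

    def snapshot(self):
        # Requests read the weights and version that are live at this moment.
        with self._lock:
            return self._weights, self.version

    def hot_swap(self, new_weights):
        # Build the new copy outside the lock, then swap the reference so
        # serving is only blocked for the duration of a pointer update.
        fresh = dict(new_weights)
        with self._lock:
            self._weights = fresh
            self.version += 1


def serve(server, trace_buffer, num_requests):
    """Serve requests and stream verification outcomes to the buffer."""
    for request_id in range(num_requests):
        _, version = server.snapshot()
        # Hypothetical trace record: accepted and rejected draft tokens.
        trace_buffer.put({"request_id": request_id, "draft_version": version,
                          "accepted": [11, 12], "rejected": [13]})
    trace_buffer.put(None)  # sentinel: no more traffic in this demo


def train(server, trace_buffer, batch_size):
    """Consume traces asynchronously and push updated weights back."""
    weights = {"bias": 0.0}
    batch = []
    while True:
        trace = trace_buffer.get()
        if trace is None:
            break
        batch.append(trace)
        if len(batch) == batch_size:
            weights["bias"] += 0.1 * len(batch)  # placeholder for a gradient update
            server.hot_swap(weights)
            batch.clear()


def run_demo(num_requests=8, batch_size=4):
    server = InferenceServer({"bias": 0.0})
    trace_buffer = queue.Queue()
    trainer = threading.Thread(target=train, args=(server, trace_buffer, batch_size))
    trainer.start()
    serve(server, trace_buffer, num_requests)
    trainer.join()
    return server.snapshot()
```

With the defaults, `run_demo()` processes two full batches, so the served weights are swapped twice. Tagging each trace with the draft version that produced it is one way a trainer could reason about how stale its data is; whether Aurora records this is not stated in the text.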

Method

Online Speculator Training as Asynchronous RL

We view online speculative decoding as an asynchronous reinforcement learning system. The draft model acts as the policy π, and the target model plus verifier implement the environment. Each speculative step forms a short episode: the policy proposes a tree of candidate continuations, the verifier accepts a prefix, and the outcome provides structured feedback. Accepted tokens correspond to positive reward, while rejected tokens provide zero or negative reward.

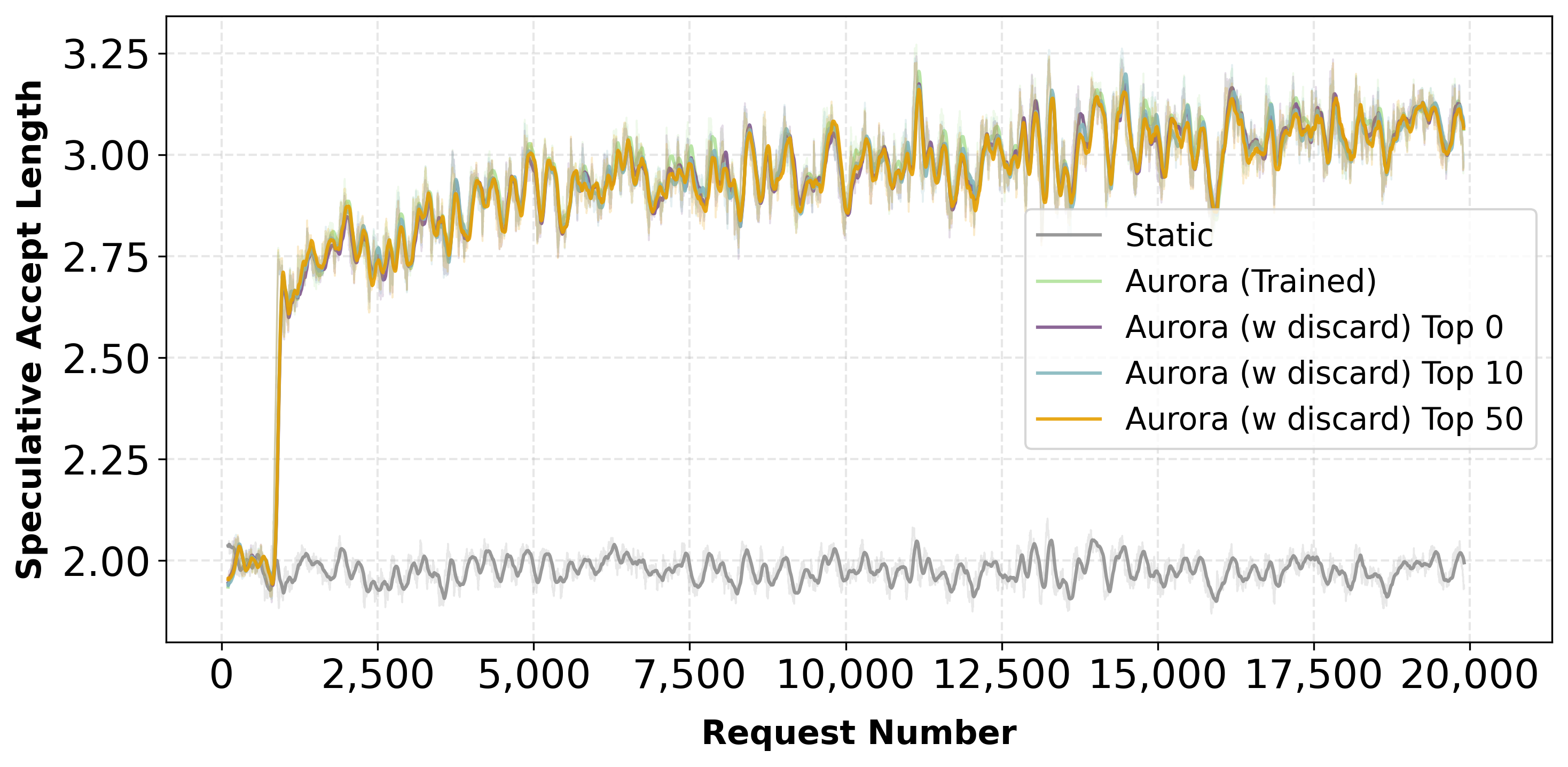
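As a rough illustration of one such episode, the sketch below scores a single drafted chain rather than a full tree. It assumes greedy verification (a draft token is accepted only if it equals the target model's own choice at that position) and assigns the rejection reward to every token after the accepted prefix. The function names, the reward values, and the `reject_reward` parameter are assumptions for illustration, not details taken from Aurora.

```python
def verify_draft(draft_tokens, target_tokens):
    """Return the length of the longest draft prefix the verifier accepts.

    Assumes greedy verification: a draft token is accepted only when it
    matches the token the target model would have produced at that position.
    """
    accepted = 0
    for draft_token, target_token in zip(draft_tokens, target_tokens):
        if draft_token != target_token:
            break
        accepted += 1
    return accepted


def episode_rewards(draft_tokens, target_tokens, reject_reward=0.0):
    """Turn one verification outcome into per-token rewards for the policy.

    Accepted tokens get +1.0; every token after the accepted prefix gets
    `reject_reward` (zero or negative). Both values are illustrative.
    """
    num_accepted = verify_draft(draft_tokens, target_tokens)
    num_rejected = len(draft_tokens) - num_accepted
    rewards = [1.0] * num_accepted + [reject_reward] * num_rejected
    return num_accepted, rewards
```

For example, `episode_rewards([5, 7, 9, 2], [5, 7, 1, 2])` accepts the first two tokens; the third mismatches, so it and everything after it are treated as rejected even though the fourth token happens to match.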

Learning from Acceptance and Rejection

Verification yields richer supervision than acceptance-only imitation. We train the draft model with two complementary signals:

- Acceptance loss (imitation): Cross-entropy on accepted tokens, encouraging the draft to reproduce verifier-approved continuations.

- Rejection loss (counterfactual feedback): Rejected branches specify what the policy should not propose. With Discard Sampling, we apply a KL-based objective that pushes probability mass away from incorrect predictions.

The total loss is a weighted combination:

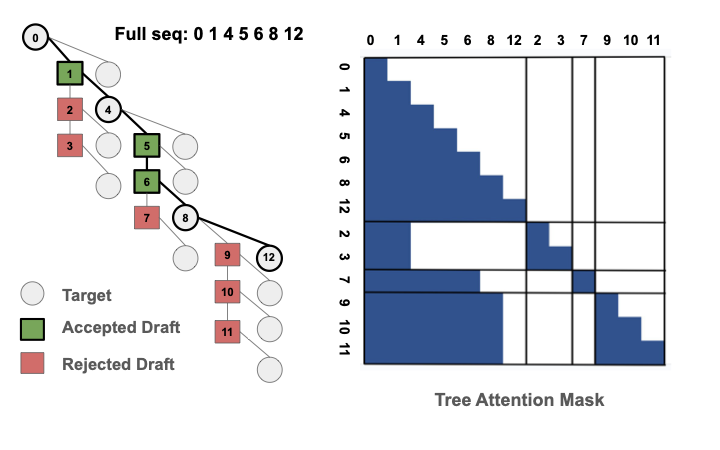
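One possible reading of this weighted combination is sketched below in plain Python. The exact form of the rejection term is not recoverable from the text, so the sketch assumes a forward KL divergence from the target distribution to the draft distribution at rejected positions, averaged per position, and a scalar weight called `rejection_weight`. All function and parameter names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(draft_logits, token_id):
    """Acceptance term: negative log-probability of the accepted token."""
    return -math.log(softmax(draft_logits)[token_id])


def kl_divergence(target_probs, draft_logits):
    """Assumed rejection term: KL(target || draft) at a rejected position."""
    draft_probs = softmax(draft_logits)
    return sum(p * math.log(p / q)
               for p, q in zip(target_probs, draft_probs) if p > 0)


def _mean(values):
    values = list(values)
    return sum(values) / len(values) if values else 0.0


def total_loss(accepted, rejected, rejection_weight=0.5):
    """Weighted sum of the acceptance and rejection terms.

    accepted: list of (draft_logits, accepted_token_id) pairs
    rejected: list of (draft_logits, target_probs) pairs
    rejection_weight: illustrative value, not taken from the source
    """
    acceptance = _mean(cross_entropy(logits, tok) for logits, tok in accepted)
    rejection = _mean(kl_divergence(probs, logits) for logits, probs in rejected)
    return acceptance + rejection_weight * rejection
```

Minimizing the KL term moves draft probability toward what the target model preferred at a rejected position, which also moves it away from the token the draft wrongly proposed. Other KL-based formulations would fit the description equally well.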

Efficient Tree Attention

We employ a specialized Tree Attention mechanism to efficiently process the complex branching structure of speculative decoding results. By constructing a custom attention mask that respects the causal structure of the speculative tree, we can process all accepted and rejected branches in a single batched forward and backward pass.

Tree Attention mechanism for efficient processing of speculative decoding branches
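A minimal sketch of such a mask, assuming the speculative tree is given as a parent-index array in which each node's parent appears before it: node `i` may attend to node `j` only if `j` is `i` itself or one of its ancestors. The function name and input encoding are illustrative, and the shared prompt prefix (which every node would also attend to) is omitted.

```python
def build_tree_attention_mask(parents):
    """Build a boolean attention mask for a token tree.

    parents[i] is the index of node i's parent, or -1 if node i is a root.
    mask[i][j] is True when node i is allowed to attend to node j, i.e.
    when j lies on the path from the root to i (inclusive).
    """
    num_nodes = len(parents)
    mask = [[False] * num_nodes for _ in range(num_nodes)]
    for node in range(num_nodes):
        ancestor = node
        while ancestor != -1:  # walk up to the root, marking each ancestor
            mask[node][ancestor] = True
            ancestor = parents[ancestor]
    return mask


# Example tree: node 0 is the root, nodes 1 and 2 are sibling candidates
# under it, and node 3 extends the branch through node 1.
example_mask = build_tree_attention_mask([-1, 0, 0, 1])
```

In `example_mask`, node 3 attends to nodes 0, 1, and 3 but not to the sibling branch at node 2. Because sibling branches never see each other, accepted and rejected branches can share one forward and backward pass without leaking information across them.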

Experimental Results

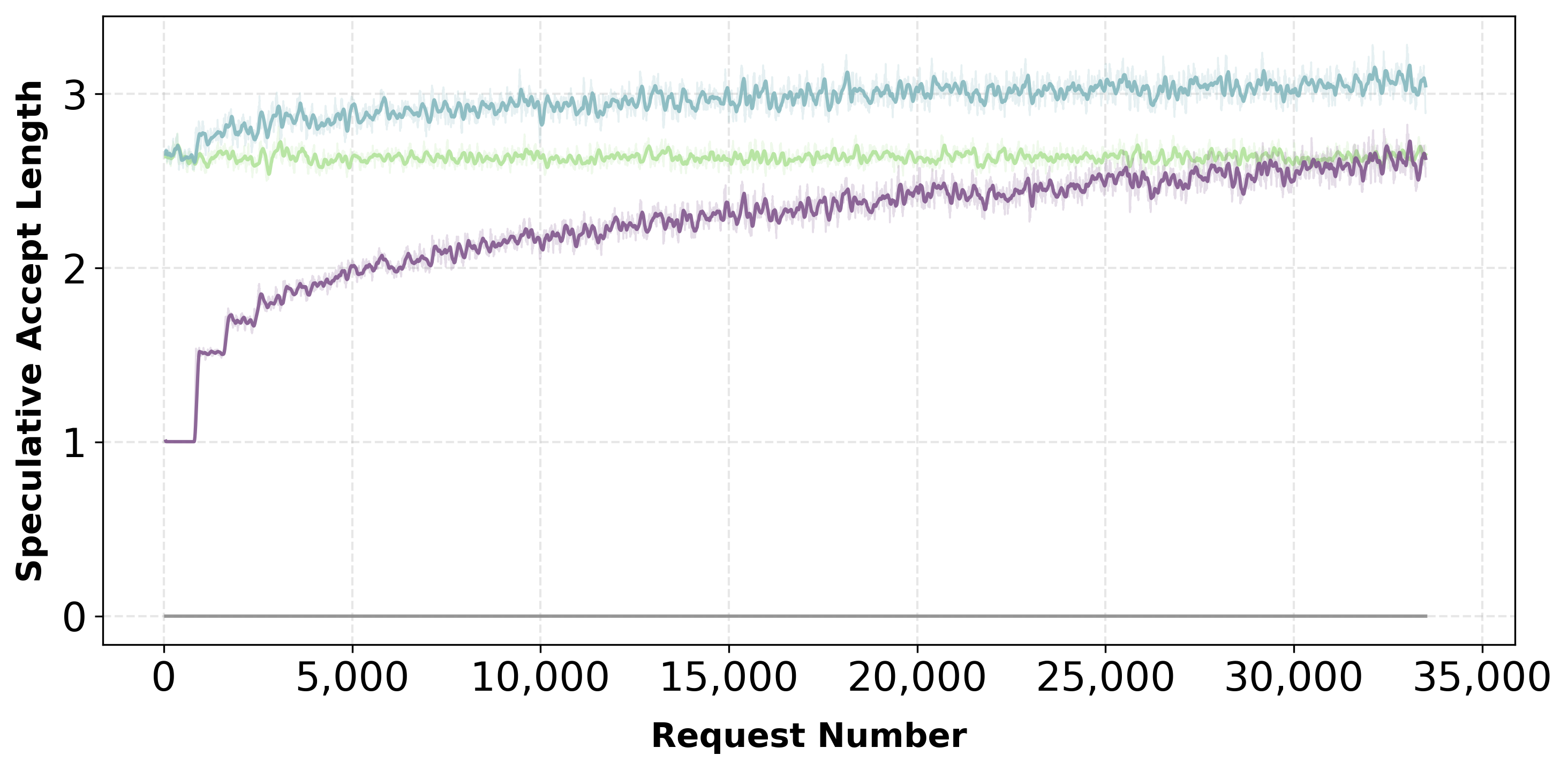

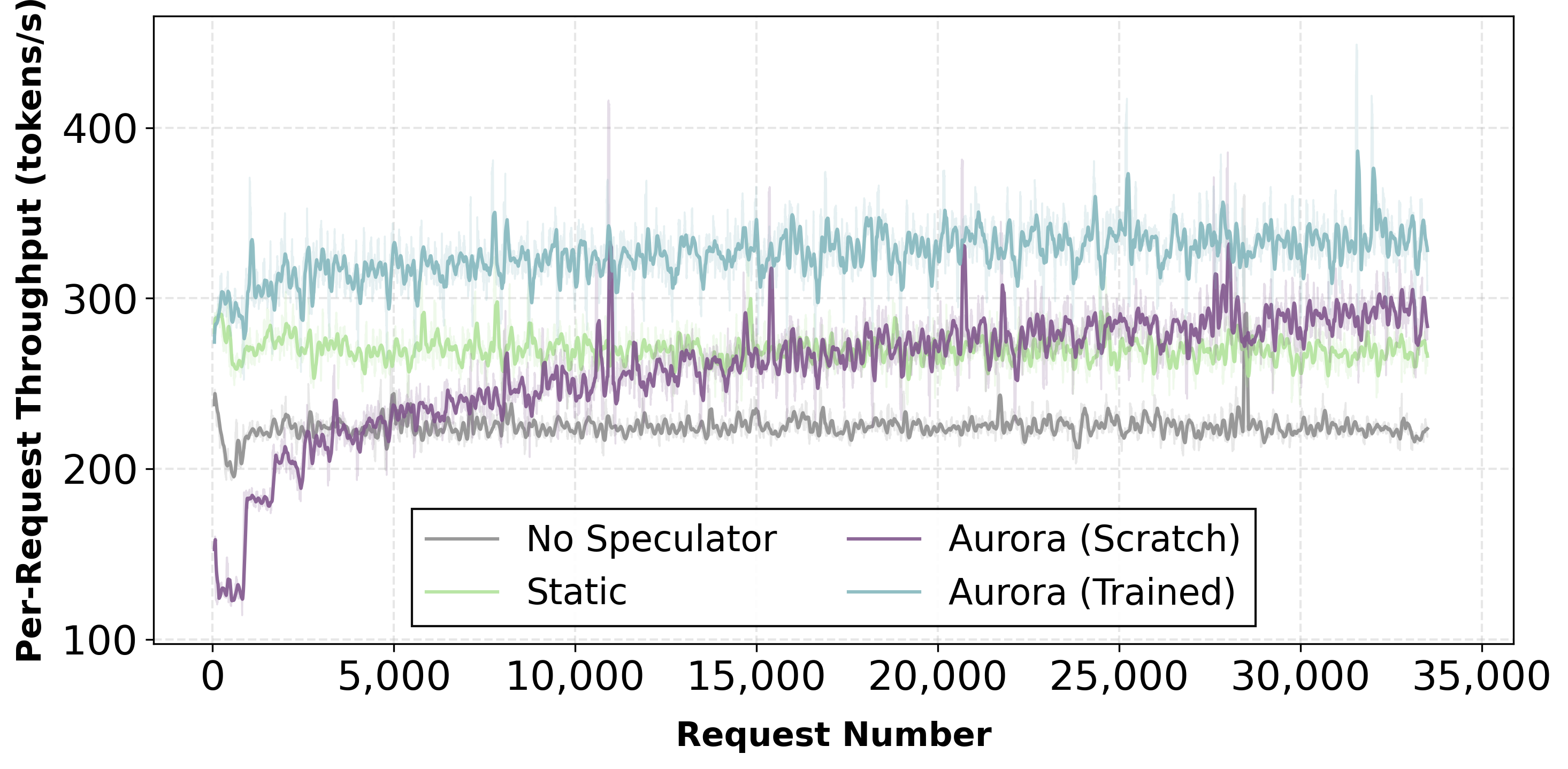

Day-0 Deployment: Training from Scratch

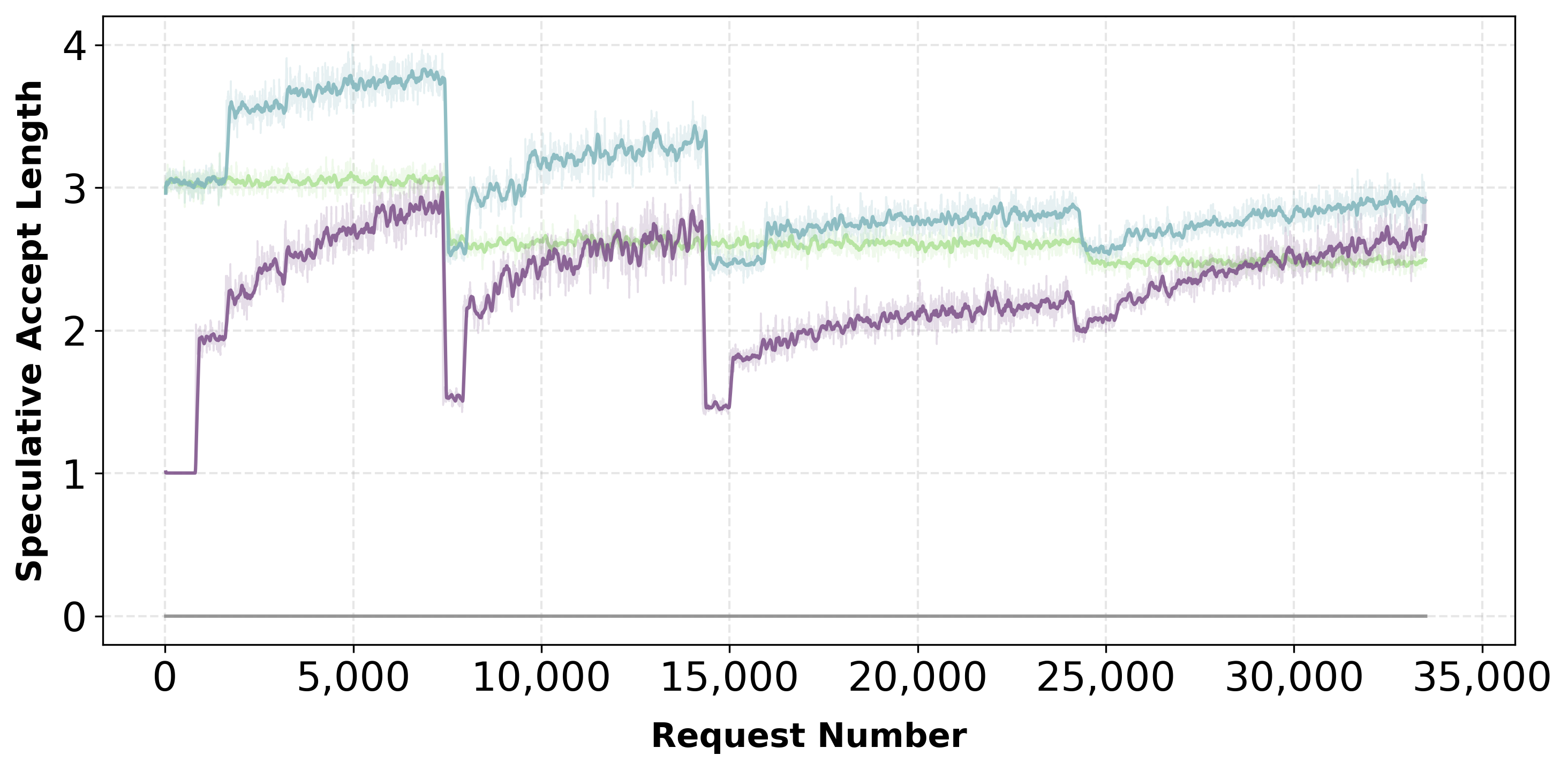

Aurora enables day-0 serving: a completely untrained speculator can be deployed immediately and become production-ready through online adaptation alone. In mixed traffic scenarios, acceptance length reaches competitive levels rapidly.

Acceptance length improves rapidly from random initialization

Throughput stabilizes at 302.3 tokens/s

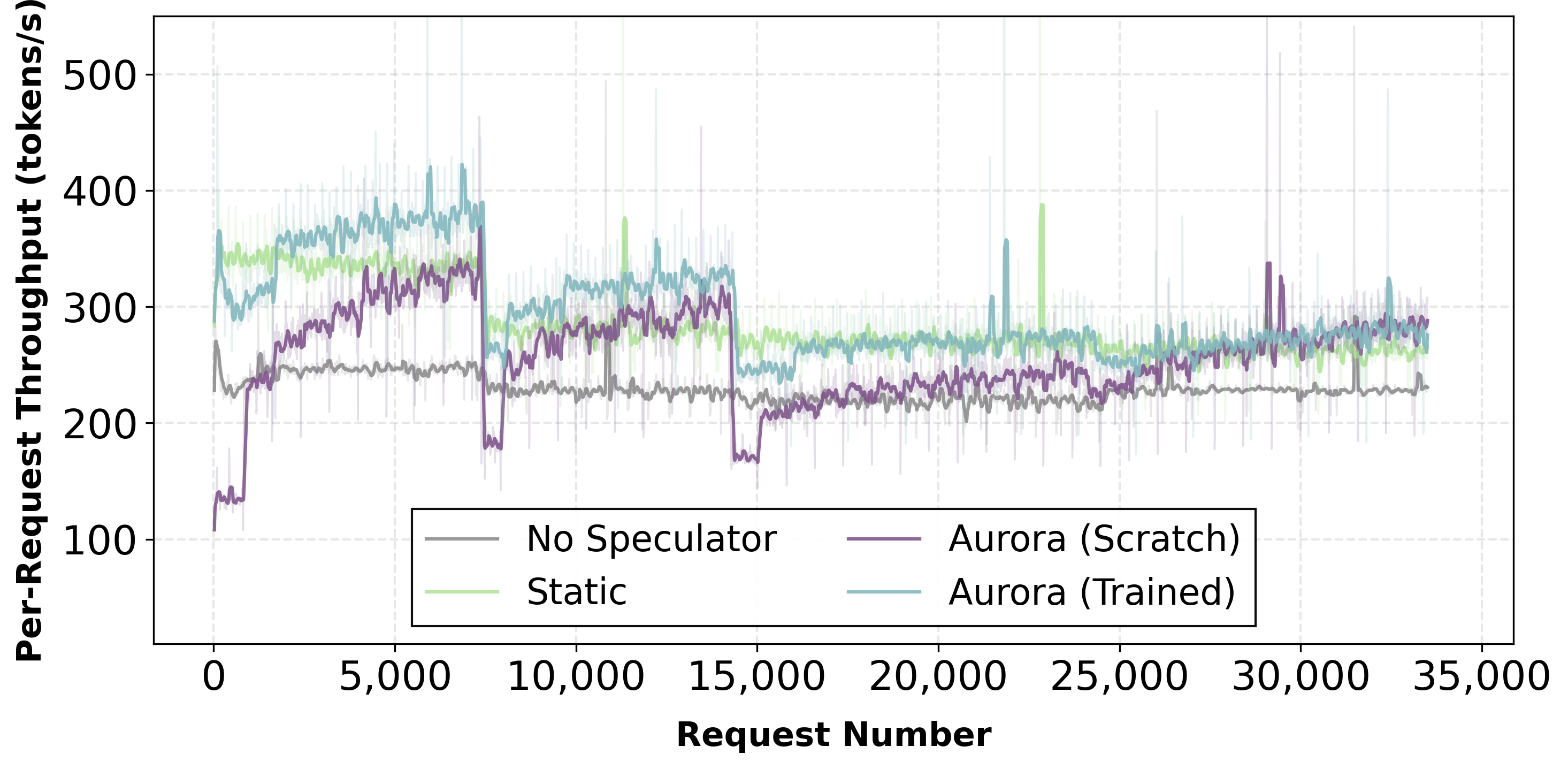

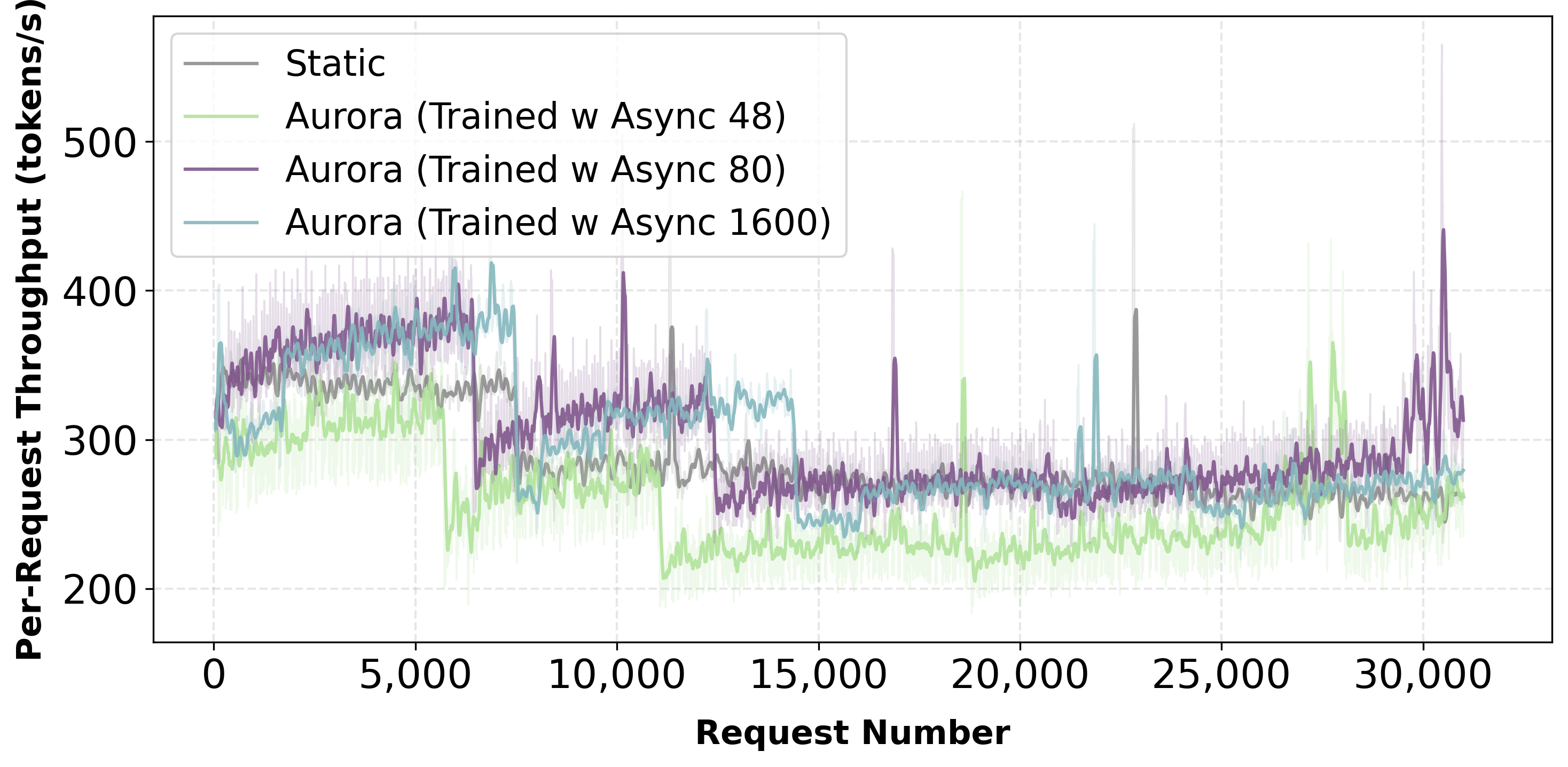

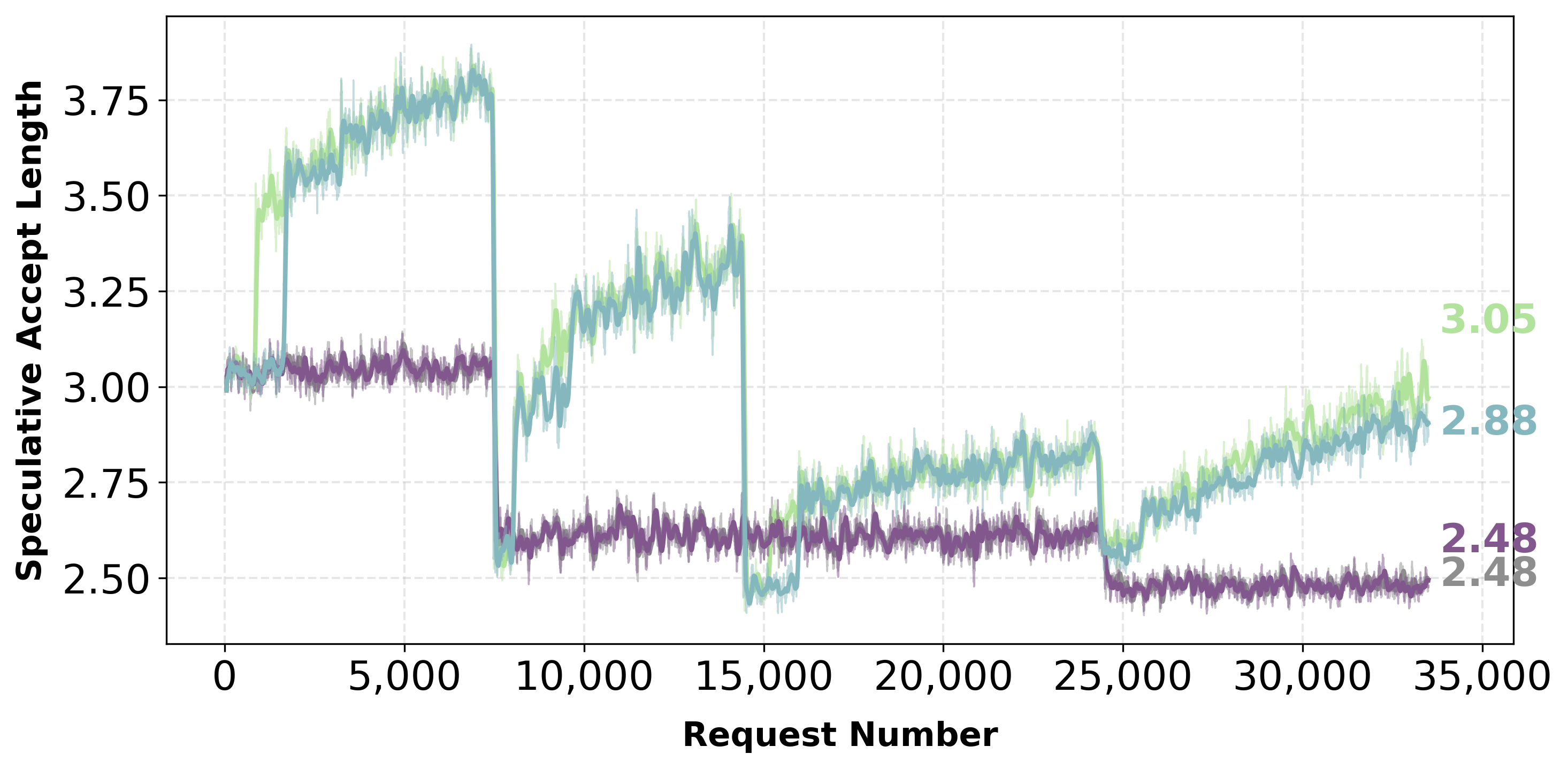

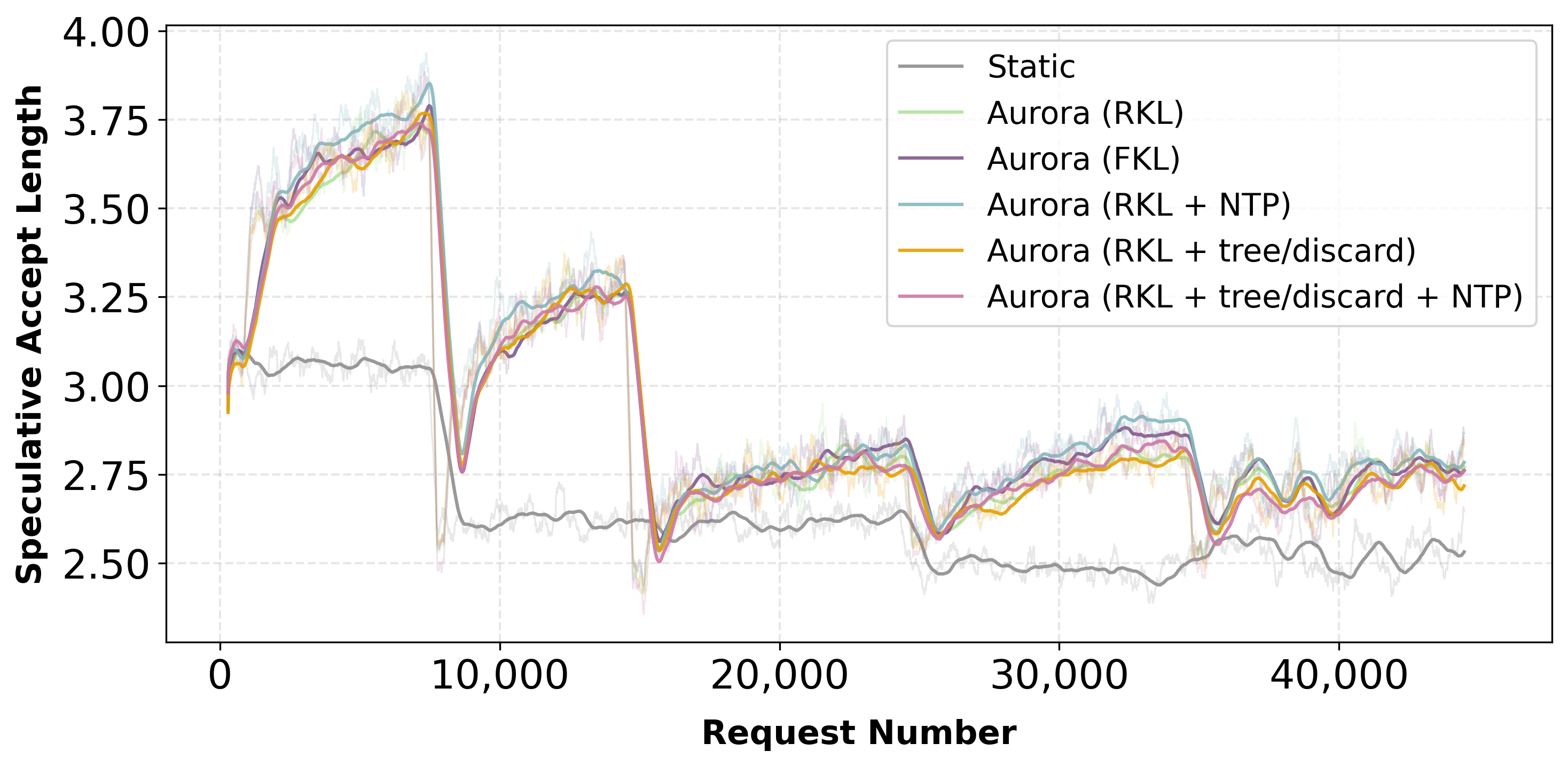

Adaptation to Distribution Shift

When requests are grouped by domain to induce abrupt distribution changes, Aurora adapts continuously. The system recovers acceptance length within approximately 10,000 requests after each shift.

Aurora adapts to domain shifts, recovering performance

Throughput maintains competitiveness despite domain shifts

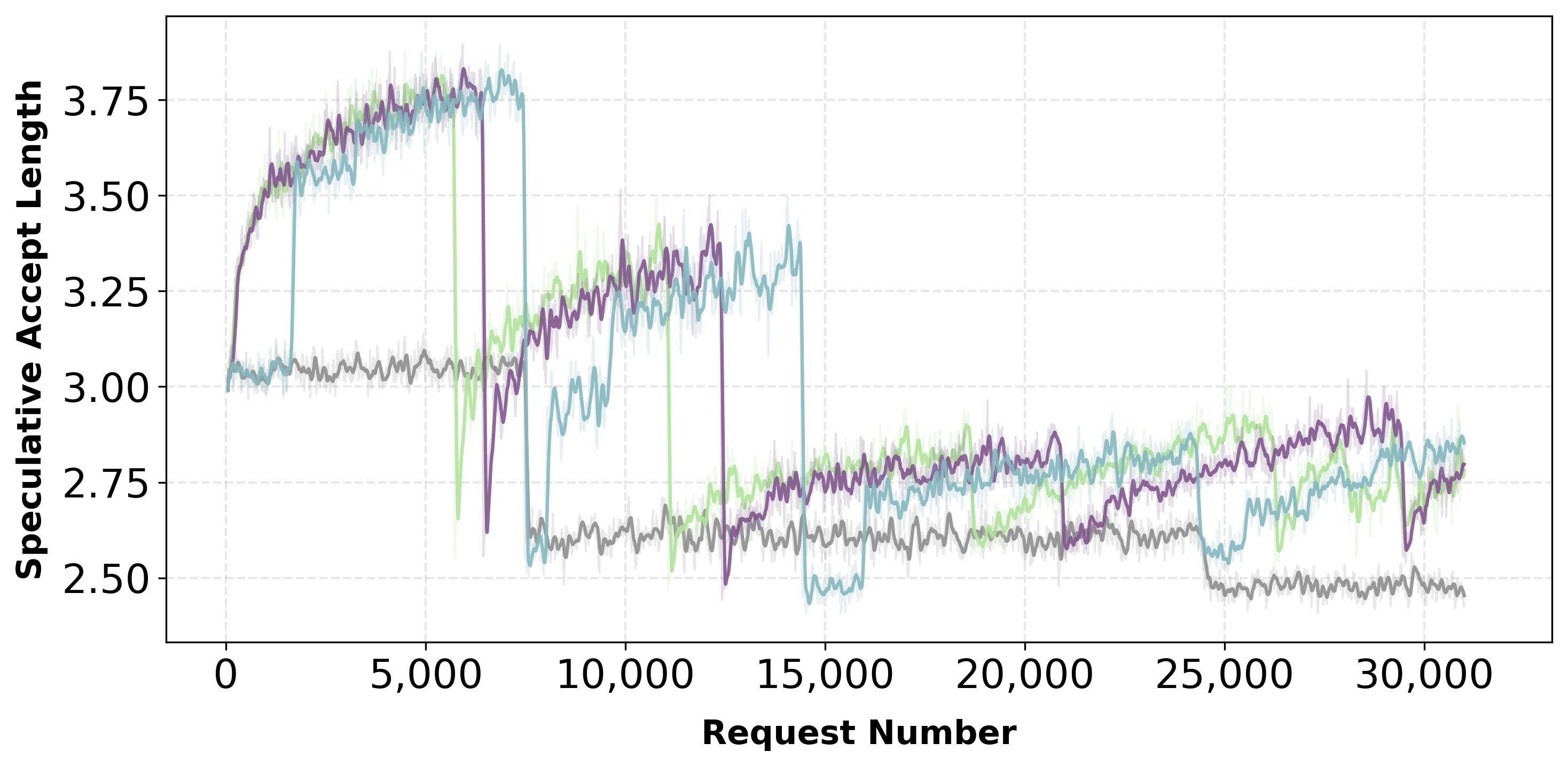

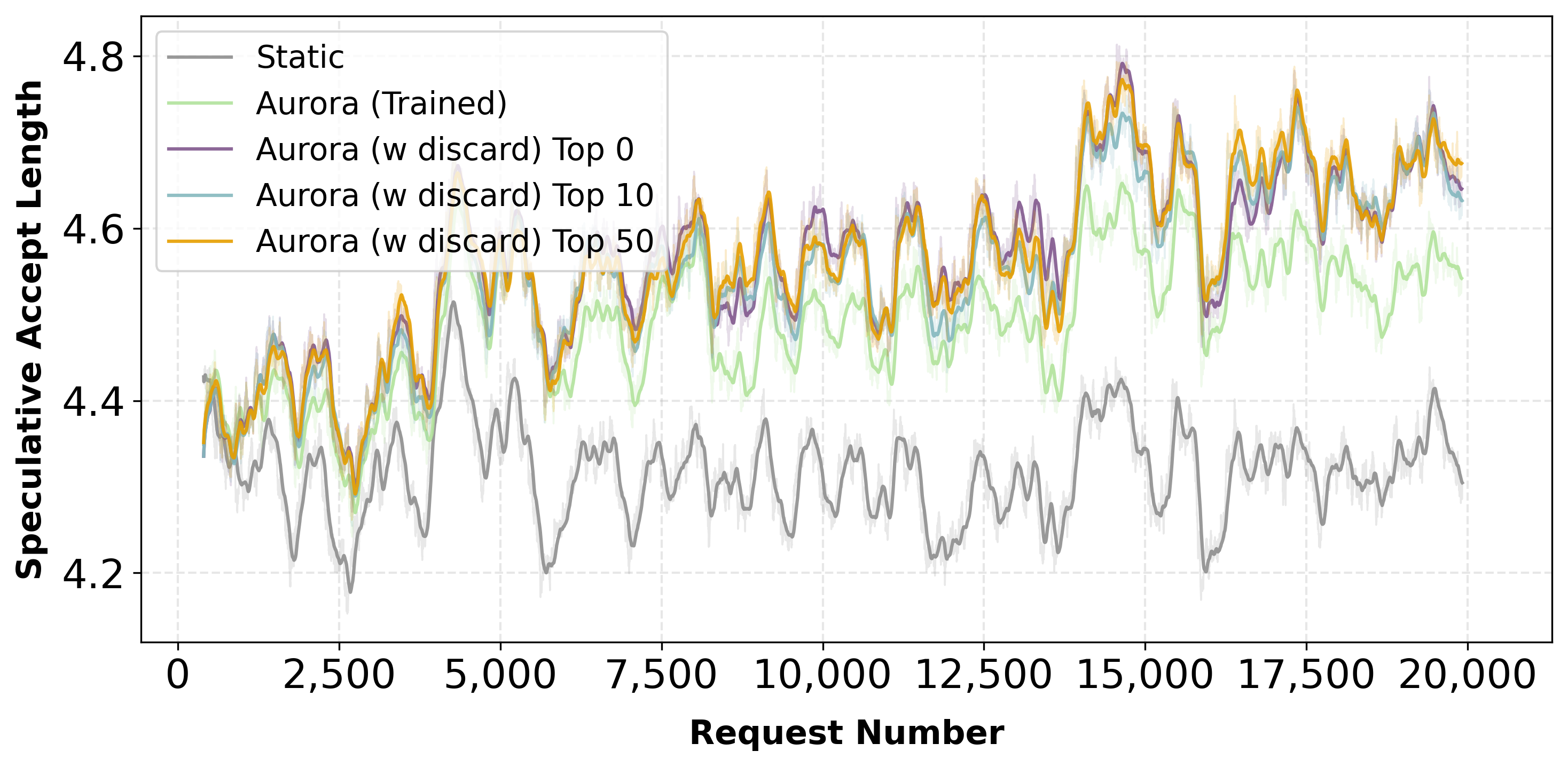

Training with Existing Speculator

Starting from a trained speculator, Aurora achieves a 1.48× speedup over the static baseline through continuous adaptation.

Pre-trained speculator under domain shift

1.48× speedup over static speculator

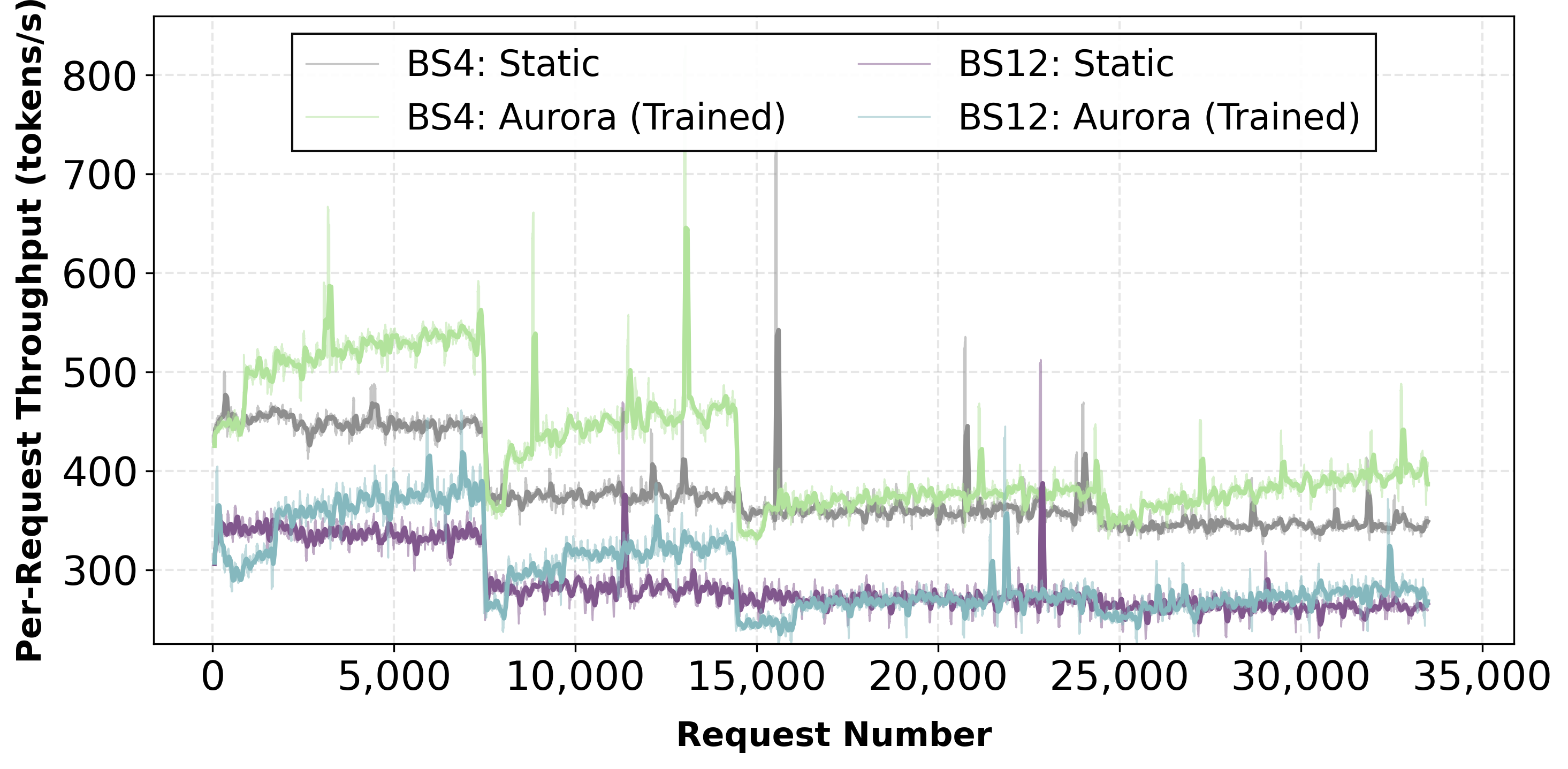

Batch size ablation: acceptance length

Batch size ablation: throughput

Coding Domain Performance

Aurora also demonstrates strong performance on coding-specific workloads across different model families.

Coding benchmark results (Qwen3-8B)

Coding benchmark results (LLaMA 3.1-8B)

Coding domain acceptance trend

Why Aurora?

Traditional speculative decoding suffers from training-serving mismatch. Aurora solves this with a unified, continuously adaptive system.

Key Finding: Online training from scratch can exceed the performance of a carefully pretrained speculator, fundamentally challenging the conventional wisdom that speculative decoding requires extensive offline pretraining.

Conclusion

We presented Aurora, a unified training-serving system that reframes speculative decoding as a joint learning-and-serving problem. By connecting an SGLang-based inference server with an asynchronous training server via GPU-aware RPC, Aurora enables continuous on-policy adaptation of the draft model under live traffic, closing the training-serving mismatch that limits conventional two-stage pipelines.

Our experiments show that simple online fine-tuning captures most attainable gains, that lazy synchronization best balances adaptation speed with serving stability, and that day-0 deployment from scratch is practical—an untrained speculator reaches competitive acceptance rates within thousands of requests, eliminating the offline pretraining bottleneck for onboarding new models.

BibTeX

@article{aurora2026,

title={When RL Meets Adaptive Speculative Training: A Unified Training-Serving System},

author={Bie, Fengxiang and Wang, Junxiong and Li, Jisen and Zhou, Zhongzhu and Xu, Chenfeng and Shao, Zelei and Wang, Yubo and Liu, Yinghui and Wu, Qingyang and May, Avner and Athiwaratkun, Ben and Zhang, Yineng and Song, Shuaiwen Leon and Wu, Xiaoxia},

journal={International Conference on Machine Learning (ICML)},

year={2026},

note={Under review},

url={https://aurora-spec.github.io}

}